EnCharge AI reimagines computing to meet needs of cutting-edge AI

By Alaina O’Regan, Office of the Dean for Research

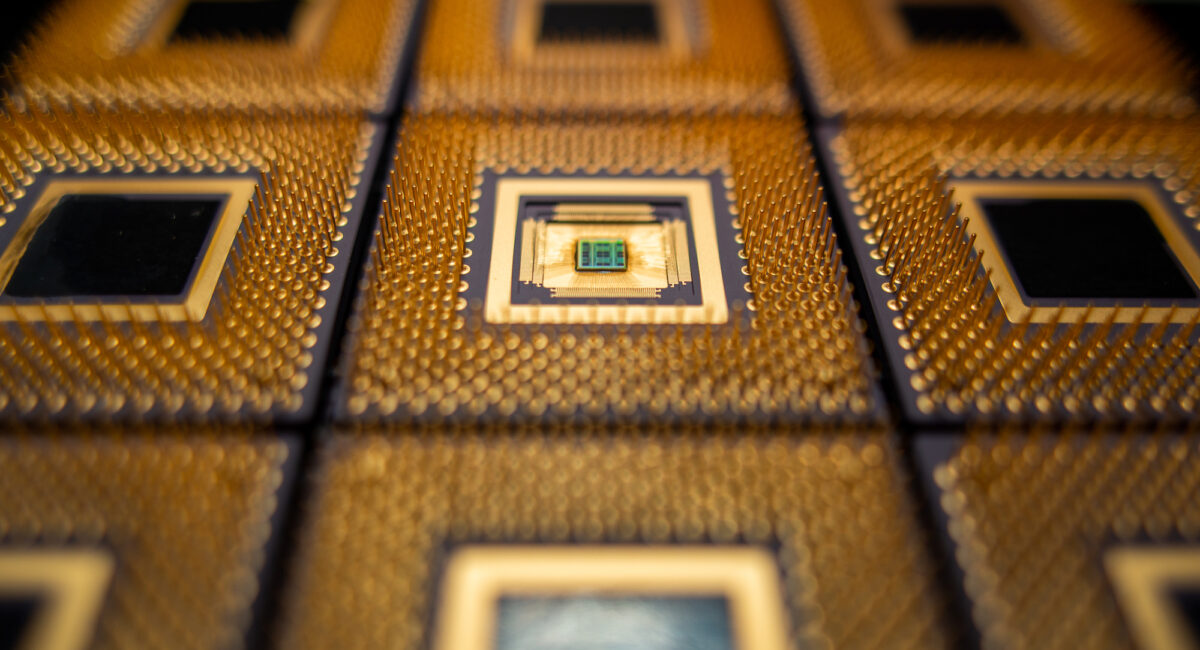

A startup based on Princeton research is rethinking the computer chip with a design that increases performance, efficiency and capability to match the computational needs of technologies that use artificial intelligence (AI). Using a technique called in-memory computing, the new design can store data and run computation all in the same place on the chip, drastically reducing cost, time and energy consumption in AI computations.

AI has the potential to solve some of the world’s most challenging problems. But the computational power needed to run the most advanced AI algorithms is rapidly exceeding the capabilities of today’s computers.

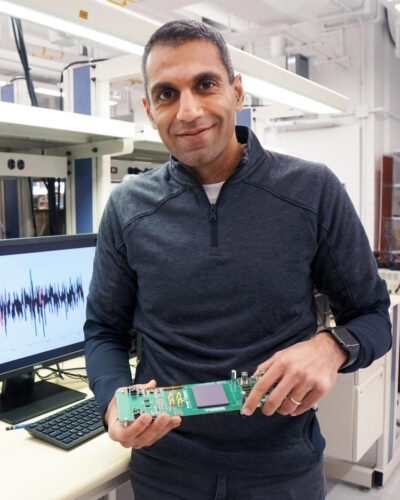

“EnCharge AI is unlocking the incredible capability that artificial intelligence can deliver by providing a platform that can match its computing needs, and performing AI computations close to where the data in the real world is being generated,” said company founder Naveen Verma, professor of electrical and computer engineering, associated faculty at the Andlinger Center for Energy and the Environment, and director of the Keller Center for Innovation in Engineering Education at Princeton University.

Modern AI requires immense computing capability and typically works by shipping data off to huge data centers in the cloud, where algorithms process the data and then send the results back to the user. But at the cutting edge of the field, where the volume of data is immense, transferring data to and from the cloud can be very expensive, take too long, or not be feasible at all, said Verma, who is also the director of Princeton’s programs in Entrepreneurship and Technology and Society.

To skip the costly and time-consuming step of exchanging data with the cloud, the EnCharge AI team designed a new kind of computer chip that allows AI to run locally, directly on the device. But because these devices are much smaller than cloud data centers, they must run with much higher efficiency. That’s where in-memory computing comes in.

“We find ourselves in a position where we’re really rethinking the fundamentals of computing, from the basic devices to the overall architectures,” Verma said. “That’s an exciting thing for researchers, and that’s been a big focus for my group for many years.”

To achieve this, the EnCharge AI team designed their chip using highly precise circuits that can be considerably smaller and more energy-efficient than today’s digital computing engines, allowing the circuits to be integrated within the chip’s memory. This in-memory approach provides the high efficiency needed for modern AI-driven applications.

“EnCharge AI’s technology, based on the work of Naveen and his graduate students, is truly innovative and potentially transformative in enabling widespread use of AI,” said Andrea Goldsmith, dean of the School of Engineering and Applied Science, Arthur LeGrand Doty Professor of Electrical Engineering, and a member of the advisory board for EnCharge AI.

“This has the potential to change the way we use computing in our daily lives,” said Murat Ozatay, a former graduate student in Verma’s lab and now a key engineer with EnCharge AI. “Our ultimate goal is to build the best AI chip in terms of power efficiency and performance.”

Ozatay said that working on research that led to a startup has given him a new perspective on how research can be impactful outside of the lab. “I think seeing this kind of thing drives other students to want to build something new,” he said.

Verma said that some of the most exciting applications for their chip lie in automation, which involves taking complex physical tasks and automating them in a way that leverages or expands the capability of humans.

The chip could cut costs and improve performance for robots in large-scale warehouses, retail automation such as self-checkout, safety and security operations, and drones for delivery and industrial purposes. The chips are programmable, so they can work with different types of AI algorithms, and scalable, so applications can grow in complexity.

“This is all possible through a user interface that’s seamless,” Verma said. “For AI innovators, the chip fits into your life easily without disrupting your ability to innovate.”

“This is a challenging field for investors and others in the innovation sphere to jump into and engage in. There are a range of technologies that try to break away from the limitations in ways conventional computers do things — you need to put in the research and work with folks to help them understand which technologies will and won’t work, and why,” Verma said, noting that the startup has raised $21.7 million from investors in its first round of financing.

Verma said that universities play an important role in driving innovation in new and differentiated technologies. “Through the deep research we can do at universities, we can rigorously build out these technologies to maturity, to move with solid footing towards real-world applications,” Verma said. “Of course, this requires that universities give faculty the time and flexibility to do this, in the way that Princeton has for me.”

Goldsmith, who serves on President Biden’s Council of Advisors on Science and Technology, said that investing in the chip industry, including in hardware that enables advanced artificial intelligence, is essential for the United States to ensure its national security and economic prosperity.

“The U.S. government has underinvested in the chip industry for decades, leaving such investment to the private sector. As a result, we have lost our lead in some areas of hardware and semiconductors,” Goldsmith said. “The CHIPS and Science Act is a once-in-a-generation investment in hardware innovation and manufacturing, and those innovations will come from universities.”

Startups are one of several ways that university technologies can lead to societal benefit. To increase innovation impact, Princeton helps researchers who wish to launch companies or nonprofits by providing information about how to find funding and how to evaluate customer need for a product, as well as help with applying for patents.

“To be a successful entrepreneur, you need more than just funding,” Goldsmith said. “You need an ecosystem including investors and advisers to help transform research innovations into successful products around which a company can be built.”

As part of this support system, Princeton began the Intellectual Property (IP) Accelerator Fund program ten years ago to provide extra funding for researchers to further develop their early-stage innovations. Verma’s research team received an IP Accelerator Fund award in 2019, which Verma said was critical to the development of their technology.

“Providing funds to help faculty develop technologies is very important for the University,” Goldsmith said. “The cycle of taking the research that we do, maximizing its impact by putting it into practice, and in that process opening up new research areas and new methodologies of teaching maximizes the impact of the research that our faculty are doing.”

“People think innovation is about starting companies, but it’s not,” Goldsmith said. “It’s really about creating new ideas and going down paths that have not been followed before.”

This story originally appeared on the Office of the Dean for Research website.