By Scott Lyon

To keep up with the explosive demands of the modern Internet, data centers will need to process more power with greater efficiency in less space. Reducing the footprint of that demand is central to the work of Ping Wang, a graduate student in electrical engineering at Princeton who recently received a top prize at one of the world’s leading conferences on power electronics.

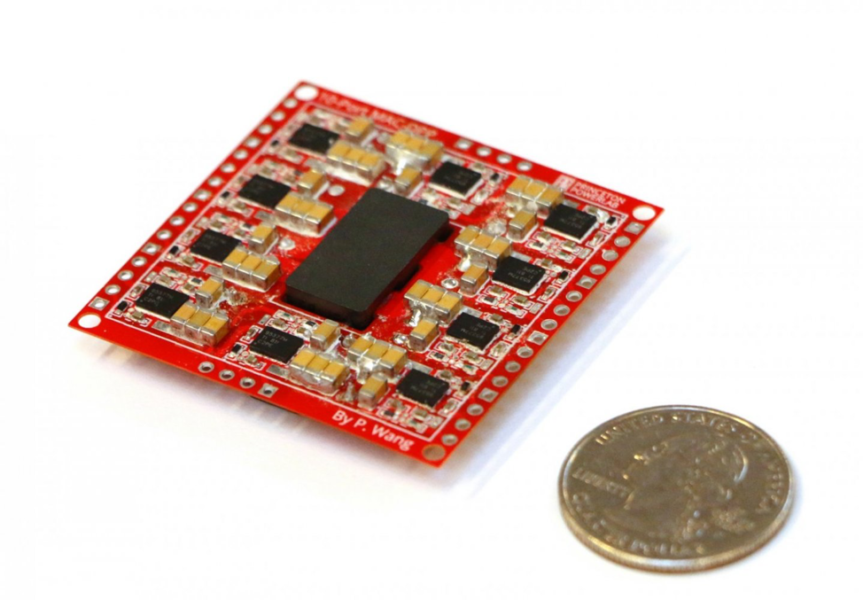

At the IEEE Energy Conversion Congress and Expo (ECCE) earlier this month, Wang and his colleague Jing Yuan were recognized for their new power-processing system. The device is eight times lighter and seven times smaller than conventional systems, while substantially increasing the efficiency of converting voltage to the levels needed by computer components. If adopted, their system could run millions of normally operating hard drives with more than 99.4 percent efficiency in voltage conversion (from 50 volts to 5 volts), an essential step in today’s high-performance computers. Standard industry converters top out at around 93 percent efficiency and usually perform much worse, according to the researchers.

“We hope this demonstration can help convince the industry there is a lot of opportunity for energy efficiency in future data centers,” said Minjie Chen, assistant professor of electrical engineering and the Andlinger Center for Energy and the Environment. Chen is Wang’s Ph.D. adviser and the principal investigator on the project. Yuan, a graduate student at Aalborg University in Denmark, is currently a visiting student research collaborator in Chen’s lab.

The key to the improvement lies in a nascent design approach that processes only part of the power, the power differential, at any given time. This differential power-processing approach has been explored sparingly in applications such as photovoltaics and batteries, but had not previously been applied to data-center systems. In a video demonstration posted on YouTube, the researchers show that the system remains stable even in worst-case scenarios.
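Why processing only part of the power helps can be seen with some simple arithmetic. The snippet below is an illustrative sketch under a simplified loss model, not the team’s design or measured data: it assumes that a share `processed_fraction` of the load power passes through converters with efficiency `converter_efficiency`, and that the remaining share reaches the loads with no conversion loss. The function name and the example values are hypothetical.

```python
def dpp_system_efficiency(processed_fraction: float, converter_efficiency: float) -> float:
    """Hypothetical helper: end-to-end efficiency of a simplified
    differential power-processing model.

    Assumptions (illustrative only): a share `processed_fraction` of the
    load power passes through converters with efficiency
    `converter_efficiency`; the remaining share reaches the loads
    directly, with no conversion loss.
    """
    if not 0.0 <= processed_fraction <= 1.0:
        raise ValueError("processed_fraction must be between 0 and 1")
    if not 0.0 < converter_efficiency <= 1.0:
        raise ValueError("converter_efficiency must be in (0, 1]")
    # Loss (in watts) per watt of load power: only the processed share
    # pays the converter's penalty of (1/eta - 1) watts per watt delivered.
    loss_per_watt = processed_fraction * (1.0 / converter_efficiency - 1.0)
    # Efficiency = power delivered / power drawn = 1 / (1 + losses).
    return 1.0 / (1.0 + loss_per_watt)


if __name__ == "__main__":
    # Illustrative values only; not measurements from the Princeton prototype.
    for fraction in (1.0, 0.5, 0.1):
        eff = dpp_system_efficiency(fraction, 0.95)
        print(f"processed share {fraction:.0%}: system efficiency {eff:.2%}")
```

With these assumed numbers, a converter that is 95 percent efficient on its own yields roughly 97.4 percent system efficiency when it handles half of the power, and about 99.5 percent when it handles a tenth of it. In this model, shrinking the share of power that must be converted matters as much as improving the converter itself.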

The advance could mark a major shift for data centers, which face exponential growth over the coming decade. Currently, these centers account for roughly two percent of global energy use. One estimate, published in the journal Nature last year, projects that data centers’ electricity consumption could more than triple by 2030. The financial and environmental costs of such growth have sparked a push to reduce the size and waste of specialized components such as step-down voltage converters. Smaller, more efficient systems allow companies like Amazon and Google to meet rising demand more cheaply and with smaller environmental impacts.

Chen likens data centers to the brain of our future society, processing, storing and distributing the world’s information. “We asked the question, ‘How do we power this brain in an efficient way?’” he said. “In my opinion, ours is one of the most promising answers.” Several top technology companies have expressed early interest in the technology, according to Chen.

Wang and Yuan were awarded the Best Student Project Demonstration on Emerging Technology First Prize at ECCE. Twenty-two teams from across the globe competed. The Princeton team’s project was titled “An Ultra-Efficient Differential Power Processing (DPP) System for Future HDD Storage Servers.” An earlier version of their technology won first place at the 2019 Princeton Innovation Forum. Wang will present the latest version at the Celebrate Princeton Innovation event in November.

Their work is funded in part by ARPA-E of the U.S. Department of Energy and the National Science Foundation.